I have been posting articles about CLARiiON recently. Now let's talk about the DMX series, the most popular storage array from EMC. To manage a DMX we have tools such as ECC (EMC ControlCenter), SMC, and SYMCLI. Let me first explain what a gatekeeper is and what it is used for.

To perform software operations on the Symmetrix, we use low-level SCSI commands. To send SCSI commands to the Symmetrix, we need to open a device, and we refer to the device we open as a gatekeeper. Symmetrix gatekeepers provide communication paths into the Symmetrix for external software that monitors and/or controls the array. There is nothing special about a gatekeeper; any host-visible device can be used for this purpose. We do not write any user data to this device, nor do we read any data from it. It is simply a SCSI target for our special low-level SCSI commands. Those commands still generate I/O to the device, however, and this can interfere with applications using the same device. This is why we always configure small devices (no data is actually stored on them) and map them to hosts to be used as "gatekeepers". Solutions Enabler treats any device of 10 cylinders or less as a preferred gatekeeper device.

Now you should understand what gatekeeper devices are. Let me explain how many gatekeepers to present to a host so that there is no performance impact.

A general recommendation for the number of gatekeepers for a single host with few applications running is 8. However, a common rule of thumb is a minimum of 6. For a host on which many applications are running, 16 is recommended. A gatekeeper should not be mapped/masked to more than one host.

Granular Recommendation (for Solutions Enabler):

It is hard to recommend an exact number of gatekeepers for a single host. The number of gatekeepers required depends on the specific host's configuration and role. Because it relates directly to performance, it is a subjective number. The following recommendation is based on variables specific to that host: at least 2 gatekeepers for simple Solutions Enabler commands, plus 1 additional gatekeeper for each daemon or EMC ControlCenter agent running on the host.
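The Solutions Enabler rule above is simple arithmetic, so it can be sketched as a small helper. This is only an illustration of the rule as stated in this post; the function name and parameters are hypothetical and are not part of any EMC tool.

```python
def recommended_gatekeepers(daemons: int = 0, ecc_agents: int = 0, base: int = 2) -> int:
    """Estimate gatekeepers for one host using the rule described above.

    base       -- gatekeepers reserved for simple Solutions Enabler commands
    daemons    -- number of Solutions Enabler daemons running on the host
    ecc_agents -- number of EMC ControlCenter agents running on the host
    """
    if daemons < 0 or ecc_agents < 0 or base < 0:
        raise ValueError("counts must not be negative")
    # One additional gatekeeper per daemon or ControlCenter agent.
    return base + daemons + ecc_agents


if __name__ == "__main__":
    # A host running two daemons and one ControlCenter agent.
    print(recommended_gatekeepers(daemons=2, ecc_agents=1))
```

Treat the result as a starting point; the general guidance above (6 to 16 per host) still applies.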

Granular Recommendation (for ControlCenter):

• Two gatekeepers are required when the Storage Agent for Symmetrix is installed on the host, and two additional gatekeepers (four in total) are required when you use Symmetrix Configure commands to manage the Symmetrix system. Each Symmetrix system needs only two Symmetrix agents. The agents need to be installed on separate hosts. This provides failover when the primary agent goes down for maintenance (or failure).

• Common Mapping Agent and host agents do not require a gatekeeper.

• Note: SYMCLI scripts should not use gatekeepers assigned to ControlCenter; they should have their own.

Gatekeepers are typically 6 cylinders, or 2880 KB, in size. All gatekeeper-type devices should be protected by either RAID-1 or RAID-S.

I hope this helps you decide how many gatekeepers to assign to a host, because it is a matter of performance.

What is “Tier 0” in Storage Environments?

Tier 0 is not new in the storage market, but it has been difficult to implement because it requires the best performance and the lowest latency. Enterprise Flash Drives (solid state disks) are capable of meeting this requirement, so a company can get more performance for its most critical applications by using the Flash drives supported in VMAX and DMX-4 systems.

If you have a multihomed host running one of the following operating systems:

· IBM AIX,

· HP-UX,

· Linux,

· Solaris,

· VMware ESX Server (2.5.0 or later), or

· Microsoft Windows

you must create a parameter file for Navisphere Agent, named agentID.txt

About the agentID.txt file:

Line 1: Fully-qualified hostname of the host

Line 2: IP address of the HBA/NIC port that you want Navisphere Agent to use

For example, if your host is named host28 on the domain mydomain.com and your host contains two HBAs/NICs, HBA/NIC1 with IP address 192.111.222.2 and HBA/NIC2 with IP address 192.111.222.3, and you want the Navisphere Agent to use NIC 2, you would configure agentID.txt as follows:

host28.mydomain.com

192.111.222.3
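If you prefer to generate the file from a script rather than a text editor, something like the following sketch would produce the same two plain lines. The helper name `write_agent_id` is hypothetical; the only details taken from this post are the file name and its two-line layout.

```python
import ipaddress
from pathlib import Path


def write_agent_id(directory, fqdn, ip_address):
    """Write a two-line agentID.txt (hostname, then IP) and return its path."""
    # Reject malformed addresses early; raises ValueError if invalid.
    ipaddress.ip_address(ip_address)
    if not fqdn or any(ch.isspace() for ch in fqdn):
        raise ValueError("hostname must be non-empty and contain no whitespace")
    path = Path(directory) / "agentID.txt"
    # Plain ASCII text, exactly two lines, no extra formatting.
    path.write_text(f"{fqdn}\n{ip_address}\n", encoding="ascii")
    return path


# Example (writes into the current directory):
# write_agent_id(".", "host28.mydomain.com", "192.111.222.3")
```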

To create the agentID.txt file, continue with the appropriate procedure for your operating system:

For AIX, HP-UX, Linux, and Solaris:

1. Using a text editor that does not add special formatting, create or edit a file named agentID.txt in either / (root) or in a directory of your choice.

2. Add the hostname and IP address lines as described above. This file should contain only these two lines, without formatting.

3. Save the agentID.txt file.

4. If you created the agentID.txt file in a directory other than root, for Navisphere Agent to restart after a system reboot using the correct path to the agentID.txt file, set the environment variable EV_AGENTID_DIRECTORY to point to the directory where you created agentID.txt.

5. If a HostIdFile.txt file is present in the directory shown for your operating system, delete or rename it. The HostIdFile.txt file is located in the following directory for your operating system:

AIX: /etc/log/HostIdFile.txt

HP-UX: /etc/log/HostIdFile.txt

Linux: /var/log/HostIdFile.txt

Solaris: /etc/log/HostIdFile.txt

6. Stop and then restart the Navisphere Agent.

NOTE: Navisphere may take some time to update; however, it should update within 10 minutes.

7. Once the Navisphere Agent has restarted, verify that Navisphere Agent is using the IP address that is entered in the agentID.txt file. To do this, check the new HostIdFile.txt file. You should see the IP address that is entered in the agentID.txt file. The HostIdFile.txt file is in the following directory for your operating system:

AIX: /etc/log/HostIdFile.txt

HP-UX: /etc/log/HostIdFile.txt

Linux: /var/log/HostIdFile.txt

Solaris: /etc/log/HostIdFile.txt
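The check in step 7 can also be scripted. The sketch below assumes that HostIdFile.txt is a plain-text file in which the agent's IP address appears literally; that is an assumption about the file format, and the function name is hypothetical.

```python
import re
from pathlib import Path


def agent_uses_expected_ip(agent_id_file, host_id_file):
    """Return True if the IP on line 2 of agentID.txt appears in HostIdFile.txt."""
    lines = [ln.strip() for ln in Path(agent_id_file).read_text().splitlines() if ln.strip()]
    if len(lines) != 2:
        raise ValueError("agentID.txt should contain exactly two lines")
    expected_ip = lines[1]
    # Require non-digit boundaries so 192.111.222.3 does not match 192.111.222.30.
    pattern = r"(?<!\d)" + re.escape(expected_ip) + r"(?!\d)"
    return re.search(pattern, Path(host_id_file).read_text()) is not None


# Example on Linux, with agentID.txt in the root directory:
# agent_uses_expected_ip("/agentID.txt", "/var/log/HostIdFile.txt")
```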

For VMware ESX Server 2.5.0 and later

1. Confirm that Navisphere agent is not installed.

2. Using a text editor that does not add special formatting, create or edit a file named agentID.txt in either / (root) or in a directory of your choice.

3. Add the hostname and IP address lines as described above. This file should contain only these two lines, without formatting.

4. Save the agentID.txt file.

5. If you created the agentID.txt file in a directory other than root, for subsequent Agent restarts to use the correct path to the agentID.txt file, set the environment variable EV_AGENTID_DIRECTORY to point to the directory where you created agentID.txt.

6. If a HostIdFile.txt file is present in the /var/log/ directory, delete or rename it.

7. Reboot the VMware ESX server.

8. Install and start Navisphere Agent and confirm that it has started.

NOTE: Before installing Navisphere Agent, refer to the EMC Support Matrix and confirm that you are installing the correct version.

NOTE: If necessary, you can restart the Navisphere Agent

NOTE: Navisphere may take some time to update; however, it should update within 10 minutes.

9. Once the Navisphere Agent has restarted, verify that Navisphere Agent is using the IP address that is entered in the agentID.txt file. To do this, check the new HostIdFile.txt file which is located in the /var/log/ directory. You should see the IP address that is entered in the agentID.txt file.

For Microsoft Windows:

1. Using a text editor that does not add special formatting, create a file named agentID.txt in the directory C:/ProgramFiles/EMC/Navisphere Agent.

2. Add the hostname and IP address lines as described above. This file should contain only these two lines, without formatting.

3. Save the agentID.txt file.

4. If a HostIdFile.txt file is present in the C:/ProgramFiles/EMC/Navisphere Agent directory, delete or rename it.

5. Restart the Navisphere Agent

6. Once the Navisphere Agent has restarted, verify that Navisphere Agent is using the correct IP address that is entered in the agentID.txt file. Either:

· In Navisphere Manager, verify that the host IP address is the same as the IP address that you entered in the agentID.txt file. If the address is the same, the agentID.txt file is configured correctly.

· Check the new HostIdFile.txt file. You should see the IP address that is entered in the agentID.txt file.

We have discussed CLARiiON: how to create a LUN, a RAID Group, and so on. Before I discuss adding storage to a host, I must discuss the Navisphere Host Agent. This is the most important service/daemon that runs on the host and communicates with the CLARiiON. Without the Host Agent you cannot register the host with the storage group automatically; if you want to register a host without the Navisphere Host Agent, you need to register it manually. The Host Agent can:

· Send drive mapping information to the attached CLARiiON storage systems.

· Monitor storage-system events and notify personnel by email, page, or modem when any designated event occurs.

· Retrieve LUN WWN (world wide name) and capacity information from Symmetrix storage systems.

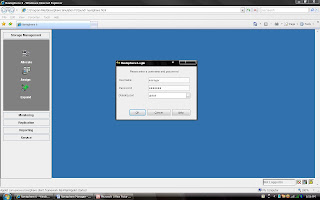

You can change the storage system password in Navisphere Manager as follows:

1. Open Navisphere Manager

2. Click Tools > Security > Change Password.

3. In the Change Password window, enter the old (current) password in the Old Password text box.

4. Enter the new password in the New Password text box and then enter it again in the Confirm New Password text box.

5. Click OK to apply the new password or Cancel to keep the current password.

6. In the confirmation popup, click either Yes to change the password or No to cancel the change and retain your current (old) password.

Note: If you click Yes, you will briefly see "The operation successfully completed" and then you will be disconnected. You will need to log back in using the new password.

I have discussed lab exercises for storage administration. Now let's talk about something more technical. You understand that when we create a RAID 5 protected LUN we use striping. So how do you calculate the stripe size of a LUN?

Before calculating the stripe size of data on a CLARiiON, we need to know how many disks make up the RAID Group, as well as the RAID type.

Consider a five-disk RAID 5 group (4 + 1). Of the five disks that make up the RAID Group, four are used to store data, and the remaining disk is used to store the parity information for the stripe of data in the event of a disk failure and rebuild. The CLARiiON formats each disk with an Element Size of 128 blocks (the number of blocks written to a disk before writing/striping to the next disk in the RAID Group), which is equal to a 64 KB Chunk Size. To determine the Data Stripe Size, we multiply the number of data disks in the RAID Group (4) by the amount of data written per disk (64 KB), which gives 256 KB of data for a RAID 5, five-disk RAID Group (4 + 1). To get the Element Stripe Size, we multiply the number of data disks (4) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 512 blocks.

The next example is another RAID 5 group, but with nine (9) disks in the RAID Group, referred to as 8 + 1. Again, eight (8) disks hold data, and the remaining disk is used to store the parity information for the stripe of data.

To determine the Data Stripe Size, we multiply the number of data disks in the RAID Group (8) by the amount of data written per disk (64 KB), which gives 512 KB of data for a RAID 5, nine-disk RAID Group (8 + 1). To get the Element Stripe Size, we multiply the number of data disks (8) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 1024 blocks.

In summary: the Stripe Size is the amount of data written to one stripe of the RAID Group, and the Element Stripe Size is the number of blocks written to one stripe of the RAID Group.
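The two calculations above can be reproduced with a few lines of Python. This is a minimal sketch that assumes 512-byte blocks (consistent with 128 blocks being 64 KB); the function and constant names are my own.

```python
ELEMENT_SIZE_BLOCKS = 128   # blocks written to one disk before moving to the next
BLOCK_SIZE_BYTES = 512      # assumed block size: 128 blocks x 512 bytes = 64 KB


def stripe_sizes(data_disks):
    """Return (data stripe size in KB, element stripe size in blocks)."""
    if data_disks < 1:
        raise ValueError("a RAID Group needs at least one data disk")
    element_stripe_blocks = data_disks * ELEMENT_SIZE_BLOCKS
    stripe_kb = element_stripe_blocks * BLOCK_SIZE_BYTES // 1024
    return stripe_kb, element_stripe_blocks


print(stripe_sizes(4))  # RAID 5 (4+1): (256, 512)
print(stripe_sizes(8))  # RAID 5 (8+1): (512, 1024)
```

Note that only the data disks are passed in; the parity disk does not add to the stripe size.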

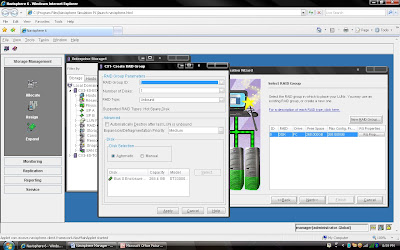

Once again, welcome to CLARiiON Lab Exercise II. Today I will demonstrate RAID Group creation and LUN binding on a CX frame. We have already covered storage allocation using the Allocation Wizard. But sometimes the host is not registered or the HBA is not logged in. Then you cannot run the storage allocation wizard; it will fail to allocate storage, and you need to go step by step.

I have tried to cover each and every step in the following lab exercise. If anybody has a question, feel free to ask.

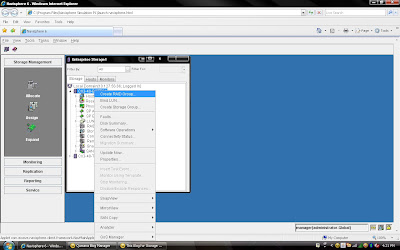

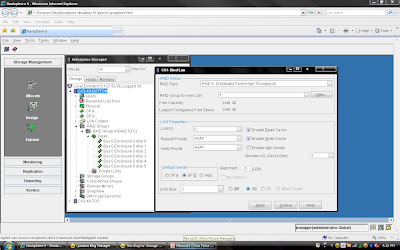

1) Log in to Navisphere Manager and select the CX frame on which you want to create the RAID Group. For example, I am going to create a RAID Group on the CX3-40-Bottom frame. Once you have selected the frame, right-click it. A submenu is listed; select Create Raid Group.

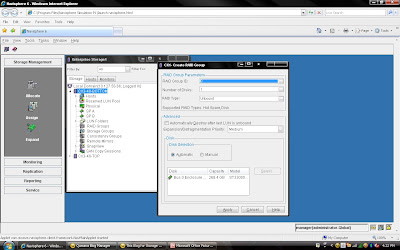


2) Once you have selected Create Raid Group, the RAID Group creation wizard pops up. Here you have to select several options depending on your requirements. First select the RAID Group ID, which is a unique ID. Then select the number of disks. What does that mean? For example, if you want to create RAID 5 (3+1), you should select 4 disks. This means there will be 4 spindles: data is striped across 3 disks' worth of capacity and one disk's worth is used for parity. If you want more spindles for performance, select more disks with a RAID 5 configuration; for example, you can select 8 disks for RAID 5 (7+1). You also have the option to pick disks directly instead of giving a count. Now you understand how to select the number of disks. Next, select the RAID protection level.

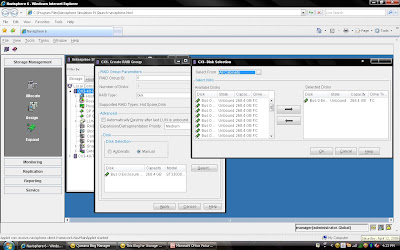

For the rest, you can keep the defaults. But suppose you want to create the RAID Group on particular disks, because the same CX frame can contain different types of disk. In that case select Manual and then choose the particular disks under the disk selection tab.

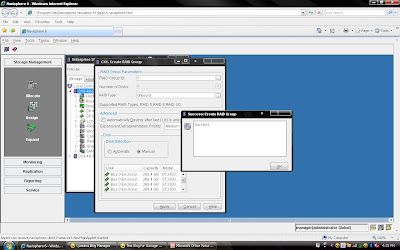

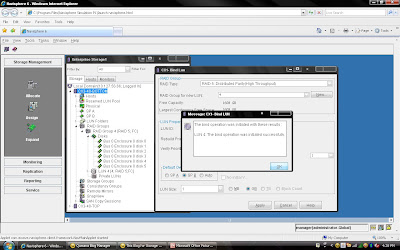

Once you have selected all the values, click Apply. This creates the RAID Group with the given configuration, and a message pops up saying that the RAID Group was created successfully. If you want to create more groups, you can continue with the wizard; otherwise click Cancel.

Once you have created the RAID Group, you can bind LUNs. What does binding mean? Let's look at what happens when you create a RAID Group. A RAID Group is something like a storage pool. It provides usable storage space according to the number of disks you selected and the RAID type. For example, we have created RAID Group 4 and its size is 1600 GB. Now you want to allocate 5 LUNs of 100 GB each, or any combination of sizes, until you exhaust the space of the RAID Group. This is called binding the LUN: you carve out some space for each LUN and allocate it to a host. Each LUN has its own properties but belongs to RAID Group 4. In short, once disks have been assigned to a particular RAID Group, the CLARiiON operating system will not allow you to use the same disks for another RAID protection level.

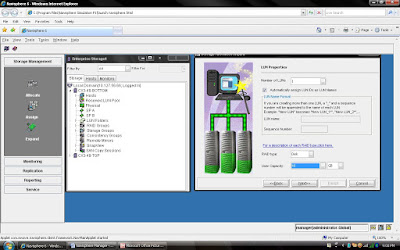

Let's now bind a LUN from RAID Group 4.

Again, either select the particular RAID Group of the CX frame, or select the CX frame, right-click, and select Bind LUN.

You have already created a RAID 5 group with available space on it, so select RAID 5 as the RAID Type. Select the RAID Group number that belongs to RAID 5; for example, we created RAID Group 4 with RAID 5 protection. Once you have selected the RAID Group, the wizard shows the space available on it. In our case we have 1608 GB available on RAID Group 4, and I want to create a 50 GB LUN out of that 1608 GB. Once you have selected the RAID Group number, you can select the LUN number and properties such as the LUN name and the LUN Count (how many LUNs of the same size you want to create). In most cases everything else can stay at its default value. Generally, set Default Owner to Auto and let the system decide which SP should own the device. In CLARiiON there is a concept of LUN ownership: at any time, only one Storage Processor can own a LUN. I will cover the LUN ownership model later. Once you have made your selections, click Apply.

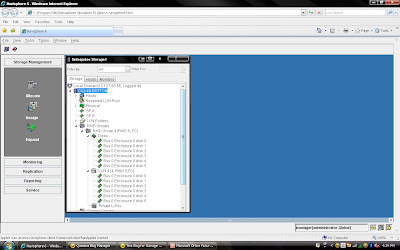

Once you have created all the LUNs, you can cancel that window and expand the particular RAID Group; you will see the LUNs listed under it.


This is the end of CLARiiON Lab Exercise II. In the next class I will cover the following topics:

1) How to create a Storage Group

2) Assigning a LUN to a Storage Group

3) Presenting a LUN to a host

EMC Brings Flash Drives in DMX-4 Frame

EMC made an important announcement about its 73 GB and 146 GB flash drives, or solid state drives (SSDs). Flash drives represent a new solid-state storage tier, "tier 0", for the Symmetrix DMX-4. In addition, EMC will offer Virtual Provisioning for Symmetrix DMX-3 and DMX-4, as well as 1 TB SATA II drives.

With this announcement EMC became the first storage vendor to integrate flash technology into its enterprise-class arrays. There is excitement in an industry that is looking for faster transactions and better performance. Why so much excitement among customers? I will discuss some of the technical details in the following paragraphs.

With flash drive technology in a Symmetrix DMX-4 storage system, a credit card provider could clear up to six transactions in the time it once took to process a single transaction. Overall, EMC's efforts could significantly alter the dynamics of the flash SSD market, where standalone flash storage systems have been available only from smaller vendors.

storage system by about 10%. But in high-end business applications where every bit of IOPS performance counts, that premium becomes entirely acceptable. When an organization truly needs a major boost then flash drives are a very real and very reasonable solution.

# Flash drives are manufactured by STEC, Inc.

# DMX-4 uses RAID 5 (3+1) and (7+1) for flash drives

# Flash drives operate on a 2GB/s FC loop

# Flash drives come in two sizes, 73 GB and 146 GB, in a 3.5" FC drive form factor at 2GB/s

# Flash drives support both FBA and CKD emulation

# Limitation: all the members must be flash drives within the same quadrant (DA pair)

# Must have at least one flash drive as a hot spare

# RAID 1 and RAID 6 are also in qualification

# Mixing flash and disk drives on the same loop is allowed

# PowerVault drives must be hard disk drives

# Maximum of 32 flash drives per DA

# Cache partitioning and QoS priority control are highly recommended

# Flash drives can be protected with TimeFinder and SRDF

Most suitable for customer workloads such as:

# Currency Exchange & Arbitrage

# Trade Optimization

# Real Time Data/Feed Processing

# Credit Card Fraud Detection

# Contextual Web Advertising

# Real Time Transaction Systems

# Data Modeling & Analysis

One Flash drive can deliver IOPS equivalent to 30 15K hard disk drives with approximately 1 ms application response time. This means Flash memory achieves unprecedented performance and the lowest latency ever available in an enterprise-class storage array.

I am going to demonstrate a full set of CLARiiON lab exercises. If anybody is interested in a specific lab exercise, please send me an email and I will try to help. There are many exercises, such as:

1) Create RAID Group

2) Bind the LUN

3) Create Storage Group

4) Register the Host

5) Present LUN to Host

6) Create Meta LUN etc.

CLARiiON LAB Session -I

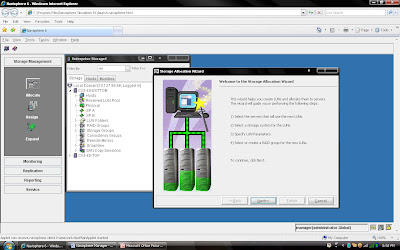

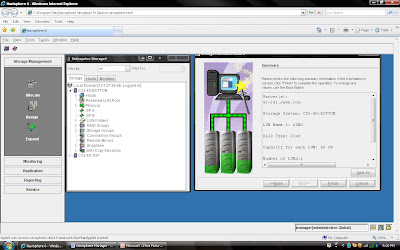

I am going to demonstrate a lab exercise for allocating storage to a host from a CX array using the Allocation Wizard in Navisphere Manager. I will also demonstrate other methods, such as allocating storage without the wizard, because sometimes the host will not log in to the CX frame. I will discuss the command line as well, for those who are more interested in scripting.

Step 1:

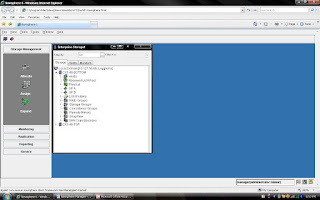

Log in to Navisphere Manager (take the IP address of any SP in your domain and type it into a browser).

You can see all the CLARiiON arrays listed under each domain.

Step 2: Click Allocation in the menu tree on the left.

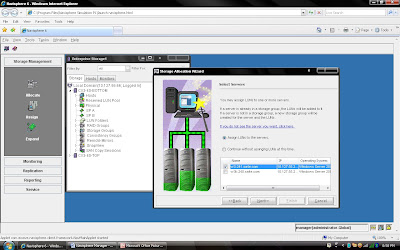

Step 3: Select the host name (the host to which you are going to present the LUN) and click Next.

You can select Assign LUN to this server, or you can continue without assigning.

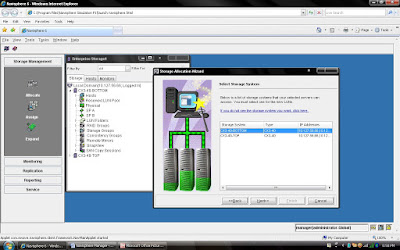

Step 4: Click Next and select the CX frame on which you want to create the LUN.

Step 5: Click Next. If you have already created a RAID Group, it will be listed here; otherwise you can create a new one by selecting New Raid Group. (I will discuss later how to create different RAID Groups.)

Step 6: Select the RAID Group ID and, depending on the RAID type, the number of disks. For example, if you are creating RAID 5 (3+1), select 4 disks.

Once you have created the RAID Group, it is listed in the RAID Group dialog box. Click Next and select the number of LUNs you want to create on that RAID Group. For example, for a RAID Group created as 3+1 with 500 GB disks, you can use roughly 500 x 4 x 70% GB. You can create LUNs of different sizes on the same RAID Group.

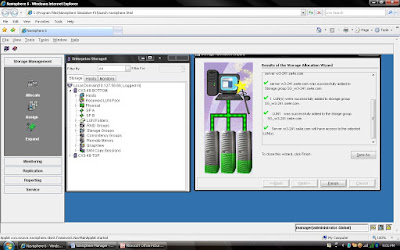

Step 7: Once you have selected the number and size of the LUNs, you can verify the configuration before you click the Finish button.

Step 8: Once you click the Finish button, you can see the status. The system creates a Storage Group with the server name (you can change the Storage Group name later) and adds the created LUNs to it.

You can verify the entire configuration by clicking storage group name:

A RAID appears to the operating system as a single logical hard disk. RAID employs the technique of disk striping, which involves partitioning each drive's storage space into units ranging from a sector (512 bytes) up to several megabytes. The stripes of all the disks are interleaved and addressed in order.

In a single-user system where large records, such as medical or other scientific images, are stored, the stripes are typically set up to be small (perhaps 512 bytes) so that a single record spans all disks and can be accessed quickly by reading all disks at the same time. In a multi-user system, better performance requires establishing a stripe wide enough to hold the typical or maximum size record. This allows overlapped disk I/O across drives.

There are at least nine types of RAID, as well as a non-redundant array (RAID-0).

RAID-0:

This technique has striping but no redundancy of data. It offers the best performance but no fault-tolerance.
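To make "interleaved and addressed in order" concrete, here is a small sketch of how a logical block address could map onto a striped set with no redundancy. It illustrates the general idea only, not any particular controller's layout; the function and parameter names are my own.

```python
def locate_block(logical_block, num_disks, stripe_unit_blocks):
    """Map a logical block to (disk index, block offset on that disk)."""
    # Which stripe unit the block falls in, and where inside that unit.
    unit_index, offset_in_unit = divmod(logical_block, stripe_unit_blocks)
    # Stripe units are dealt out to the disks round-robin, row by row.
    stripe_row, disk = divmod(unit_index, num_disks)
    block_on_disk = stripe_row * stripe_unit_blocks + offset_in_unit
    return disk, block_on_disk


# Four disks, 128-block stripe units:
print(locate_block(0, 4, 128))    # (0, 0)   first unit, first disk
print(locate_block(130, 4, 128))  # (1, 2)   second unit lands on disk 1
print(locate_block(512, 4, 128))  # (0, 128) wraps back to disk 0, next row
```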

RAID-1:

This type is also known as disk mirroring and consists of at least two drives that duplicate the storage of data. There is no striping. Read performance is improved since either disk can be read at the same time. Write performance is the same as for single disk storage. RAID-1 provides the best performance and the best fault-tolerance in a multi-user system.

RAID-2:

This type uses striping across disks with some disks storing error checking and correcting (ECC) information. It has no advantage over RAID-3.

RAID-3:

This type uses striping and dedicates one drive to storing parity information. The embedded error checking (ECC) information is used to detect errors. Data recovery is accomplished by calculating the exclusive OR (XOR) of the information recorded on the other drives. Since an I/O operation addresses all drives at the same time, RAID-3 cannot overlap I/O. For this reason, RAID-3 is best for single-user systems with long record applications.
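The XOR recovery mentioned above is easy to demonstrate. In this minimal sketch, three byte strings stand in for the data drives; the parity is their byte-wise XOR, and a lost drive is rebuilt by XOR-ing the survivors with the parity. The helper name is my own.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


data = [b"AAAA", b"BBBB", b"CCCC"]   # contents of three data drives
parity = xor_blocks(data)            # what the parity drive would hold

# Simulate losing the second drive and rebuilding it from the rest.
recovered = xor_blocks([data[0], data[2], parity])
assert recovered == data[1]
```

The same calculation underlies the parity used by RAID-4 and RAID-5; those levels differ in where the parity is stored, not in how it is computed.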

RAID-4:

This type uses large stripes, which means you can read records from any single drive. This allows you to take advantage of overlapped I/O for read operations. Since all write operations have to update the parity drive, no I/O overlapping is possible. RAID-4 offers no advantage over RAID-5.

RAID-5:

This type includes a rotating parity array, thus addressing the write limitation in RAID-4. Thus, all read and write operations can be overlapped. RAID-5 stores parity information but not redundant data (but parity information can be used to reconstruct data). RAID-5 requires at least three and usually five disks for the array. It's best for multi-user systems in which performance is not critical or which do few write operations.

RAID-6:

This type is similar to RAID-5 but includes a second parity scheme that is distributed across different drives and thus offers extremely high fault- and drive-failure tolerance.

RAID-7:

This type includes a real-time embedded operating system as a controller, caching via a high-speed bus, and other characteristics of a stand-alone computer. One vendor offers this system.

RAID-10:

Combining RAID-0 and RAID-1 is often referred to as RAID-10, which offers higher performance than RAID-1 but at much higher cost. There are two subtypes: In RAID-0+1, data is organized as stripes across multiple disks, and then the striped disk sets are mirrored. In RAID-1+0, the data is mirrored and the mirrors are striped.

RAID-50 (or RAID-5+0):

This type consists of a series of RAID-5 groups striped in RAID-0 fashion to improve RAID-5 performance without reducing data protection.

RAID-53 (or RAID-5+3):

This type uses striping (in RAID-0 style) for RAID-3's virtual disk blocks. This offers higher performance than RAID-3 but at much higher cost.

RAID-S (also known as Parity RAID):

This is an alternate, proprietary method for striped parity RAID from Symmetrix that is no longer in use on current equipment. It appears to be similar to RAID-5 with some performance enhancements as well as the enhancements that come from having a high-speed disk cache on the disk array.

The DMX-4 is a new series in the Symmetrix family with many new features and high performance, built on DMX (Direct Matrix Architecture). I will discuss what the DMX architecture is later.

There are two models in the DMX-4 series: the DMX-4 and the DMX-4 950. The DMX-4 supports full connectivity to open systems hosts and to mainframe hosts over ESCON and FICON.

The DMX-4 950 represents a lower entry point for DMX technology, providing open systems connectivity with FICON connections for mainframe hosts.

The DMX-4 is the world's largest high-end storage array, allowing configurations from 96 to 2400 drives in a single system. Yes, 2400 drives! That means you can have petabyte-scale storage in one box.

The main features of the DMX-4 are:

Mainframe Connectivity

4 Gb/s back-end support

Point to Point connection

SATA II drives support

Support for Enginuity 5772 (Enginuity is the operating system for DMX)

Improved RAID 5 performance via multiple location RAID XOR calculation

Partial sector read hit improvement

128 TimeFinder/Snap sessions of the same source volume

Improvements in TimeFinder/Clone create and terminate times of up to 10 times

For SRDF, synchronous response time improvement of up to 33 times

Avoiding COFW (Copy on First Write) for TimeFinder/Clone target devices

Symmetrix Virtual LUN

Clone to larger target device

Integrated RSA technology in a new feature called Symmetrix Audit Log

Improved power efficiency

RAID 6 support

Separate console for the Symmetrix Management Console

DMX-4 Performance Features:

Data Paths: 32-128

Data Bandwidth: 32-128 GB/s

Message Bandwidth: 4-6.4 GB/s

Global Memory: 16-512 GB

DMX-4 Storage Capacity:

DMX-4 offers 73 GB, 146 GB, 300 GB and 400 GB FC Drives

DMX-4 offers 73 GB, 146 GB Flash Drives, 500 GB and 1 TB SATA II disk drives

Storage Protection:

Mirrored (RAID 1) – Not supported with Flash Drives

RAID 10, RAID 1/0 – Not supported with Flash Drives

SRDF

RAID 5(3+1) or RAID 5(7+1)

RAID 6 (6+2) or RAID 6 (14+2) – Not supported with Flash Drives.

In a normal customer environment, you perform day-to-day activities such as allocating storage to different operating systems. If you do not follow best practices, you will see hosts facing performance issues, because in a SAN environment there are many things to consider before presenting SAN storage to a host. So what is the best practice for DMX/Symmetrix? First understand the customer's requirements: what applications they are running, what protection level they need (DMX supports RAID 1/0, RAID 5 (3+1), RAID 5 (7+1), and so on), and whether they are using a different disk alignment. Once you understand the customer environment, you need to plan the disk configuration at the back end, that is, on the DMX.

In summary, the decision must balance all of the following:

1)The number of physical disk slices

2)The number of meta volume members

3)Channel capacity

4)Host administration required

5)Performance required

6)Suitability for future expansion

As a simple general guide use the following:

(Ideal) Create 18570 volumes, all volumes striped @1MB in host stripe set

(Good) Create 18570 volumes, create metas with 4-8 members

(OK) Create 18570 volumes, create metas with 16 members

(Warn) Create 18570 volumes, create metas with 17+ members

Smaller split sizes can be used for small datasets, and combined into meta volumes for extra-performance with high-load but small-capacity applications.

Larger split sizes are more suitable for larger datasets. A good tip is to have the volumes for an application spread over a minimum of 8 physical disks where possible, with only 1 volume per physical disk. Note that most hosts can only see a maximum of 512 host volumes per channel (1 meta volume = 1 host volume, 1 non-meta volume = 1 host volume).

Most of the time you end up wondering why a 500 GB disk fills up without delivering its full capacity. The answer is that whatever size you buy, you do not get the full amount, because the DMX calculates size on a cylinder basis. Let's understand how the DMX calculates the size of a disk:

1) How do DMX 2 and Symmetrix convert cylinders to GB?

GB = (Cylinders * 15 * 64 * 512) / (1024 * 1024 * 1024)

For example, 18570 cylinders is 8.5 GB.

2) How do DMX 2 and Symmetrix convert GB to cylinders?

Cylinders = (GB * 1024 * 1024 * 1024) / (15 * 64 * 512)

3) How much capacity do I get from a drive?

This depends on the drive and the splits required.

For example, a 73 GB drive with 8 splits gives 8 x 18570-cylinder volumes = 68 GB.

4) How does DMX 3 convert cylinders to GB?

GB = (Cylinders * 15 * 128 * 512) / (1024 * 1024 * 1024)

For example, 18570 cylinders is 17.0 GB.

5) How does DMX 3 convert GB to cylinders?

Cylinders = (GB * 1024 * 1024 * 1024) / (15 * 128 * 512)

6) How much capacity do I get from a drive?

This depends on the drive and the splits required.

For example, a 73 GB drive with 4 splits gives 4 x 18570-cylinder volumes = 68 GB.

Now you should understand the formula for calculating the actual usable size of a disk on any Symmetrix or DMX.
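The conversions above can be wrapped in a short script. This is a minimal sketch of the formulas as given in this post; I read the constants as 15 tracks per cylinder, 64 or 128 blocks per track, and 512 bytes per block, and the function names and the "dmx2"/"dmx3" keys are my own.

```python
import math

TRACKS_PER_CYLINDER = 15
BYTES_PER_BLOCK = 512
# Blocks per track: 64 on DMX 2 and earlier Symmetrix, 128 on DMX 3.
BLOCKS_PER_TRACK = {"dmx2": 64, "dmx3": 128}


def bytes_per_cylinder(model):
    return TRACKS_PER_CYLINDER * BLOCKS_PER_TRACK[model] * BYTES_PER_BLOCK


def cylinders_to_gb(cylinders, model="dmx2"):
    """Convert a cylinder count to GB (1 GB = 1024**3 bytes)."""
    return cylinders * bytes_per_cylinder(model) / 1024**3


def gb_to_cylinders(gb, model="dmx2"):
    """Smallest whole number of cylinders that holds at least `gb` GB."""
    return math.ceil(gb * 1024**3 / bytes_per_cylinder(model))


print(round(cylinders_to_gb(18570, "dmx2"), 1))      # 8.5
print(round(cylinders_to_gb(18570, "dmx3"), 1))      # 17.0
print(round(8 * cylinders_to_gb(18570, "dmx2")))     # 68 (73 GB drive, 8 splits)
```

Rounding up in `gb_to_cylinders` is a design choice so that the resulting volume is never smaller than the requested size.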

About Me

- Diwakar

- Sr. Solutions Architect; Expertise: - Cloud Design & Architect - Data Center Consolidation - DC/Storage Virtualization - Technology Refresh - Data Migration - SAN Refresh - Data Center Architecture More info:- diwakar@emcstorageinfo.com