I have already discussed lab exercises for storage administration. Now let's talk about something more technical. You know that when we create a RAID 5 protected LUN, the data is striped across the disks. So how do you calculate the stripe size of a LUN?

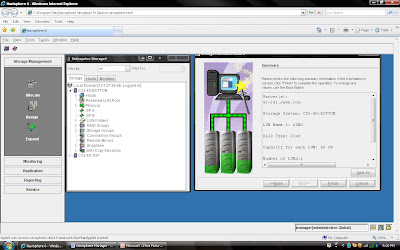

Before calculating the stripe size of data in Clariion, we need to know how many disks make up the RAID Group, as well as the RAID type.

Take a RAID 5 group of five disks (4 + 1). In each stripe, four of the five disks store data, and the remaining disk stores the parity information for that stripe, which is used for the rebuild in the event of a disk failure.

Clariion formats each disk with an Element Size of 128 blocks. The Element Size is the number of blocks written to a disk before writing/striping moves to the next disk in the RAID Group. At 512 bytes per block, 128 blocks equal the 64 KB Chunk Size, which is the amount of data written to a disk before moving to the next disk in the RAID Group.

To determine the Data Stripe Size, multiply the number of data disks in the RAID Group (4) by the amount of data written per disk (64 KB). For a RAID 5, five-disk RAID Group (4 + 1), this gives 256 KB of data. To get the Element Stripe Size, multiply the number of data disks in the RAID Group (4) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 512 blocks.

The next example is another RAID 5 group, but this time the number of disks in the RAID Group is nine (9). This is referred to as 8 + 1. Again, eight (8) disks hold data, and the remaining disk holds the parity information for the stripe of data.

To determine the Data Stripe Size, multiply the number of data disks in the RAID Group (8) by the amount of data written per disk (64 KB). For a RAID 5, nine-disk RAID Group (8 + 1), this gives 512 KB of data. To get the Element Stripe Size, multiply the number of data disks in the RAID Group (8) by the number of blocks written per disk (128 blocks), which gives an Element Stripe Size of 1024 blocks.
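The two calculations above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not a Clariion tool: the function name `stripe_sizes` and the constants are hypothetical, and the 512-byte block size is an assumption derived from 128 blocks being equal to 64 KB.

```python
# Assumed values taken from the text: 128 blocks per element, 64 KB per element,
# which implies 512 bytes per block.
ELEMENT_SIZE_BLOCKS = 128
BLOCK_SIZE_BYTES = 512


def stripe_sizes(data_disks, element_blocks=ELEMENT_SIZE_BLOCKS,
                 block_bytes=BLOCK_SIZE_BYTES):
    """Return (data_stripe_kb, element_stripe_blocks) for a RAID Group.

    data_disks counts only the disks holding data in a stripe,
    e.g. 4 for RAID 5 (4 + 1) and 8 for RAID 5 (8 + 1).
    """
    if data_disks < 1:
        raise ValueError("data_disks must be at least 1")
    # Element Stripe Size: blocks written across all data disks in one stripe.
    element_stripe_blocks = data_disks * element_blocks
    # Data Stripe Size: the same amount expressed in KB.
    data_stripe_kb = element_stripe_blocks * block_bytes // 1024
    return data_stripe_kb, element_stripe_blocks


if __name__ == "__main__":
    for label, data_disks in (("4 + 1", 4), ("8 + 1", 8)):
        kb, blocks = stripe_sizes(data_disks)
        print(f"RAID 5 ({label}): {kb} KB data stripe, {blocks} blocks element stripe")
```

Only the data disks are passed in, because the parity disk does not add to the amount of user data in a stripe.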

In summary: the Data Stripe Size is the amount of data written to one stripe of the RAID Group, and the Element Stripe Size is the number of blocks written to one stripe of the RAID Group.

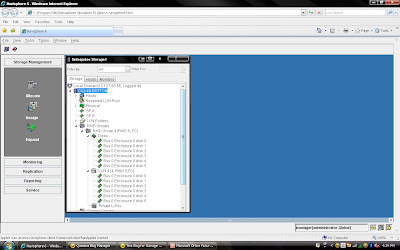

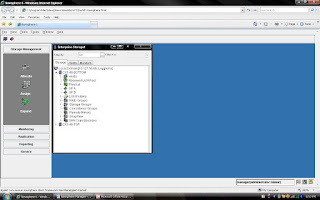

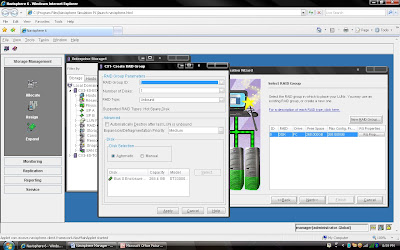

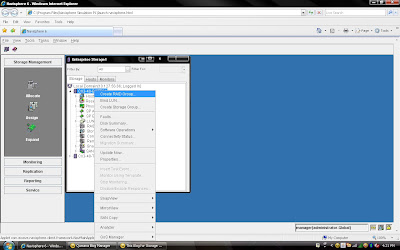

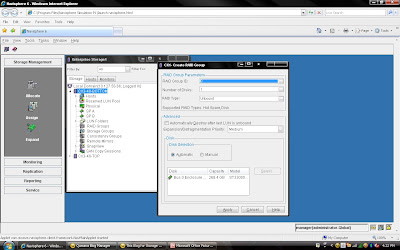

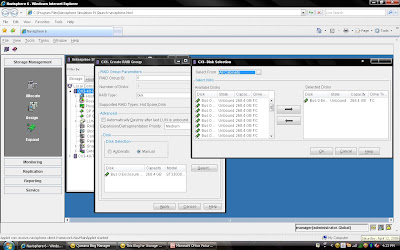

2) Once you have selected create group, the RAID Group creation wizard pops up. Here you have to select a number of options depending on your requirements. First, select the RAID Group ID, which is a unique ID. Then select the number of disks. What does that mean? For example, if you want to create RAID 5 (3 + 1), you should select 4 disks, which means there will be 4 spindles.

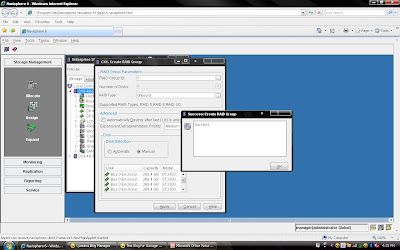

For the rest, you can keep the defaults. But suppose you want to create the RAID Group on ... Once you have selected all the values, you can click Apply. This creates the RAID Group with the given configuration. It will ...

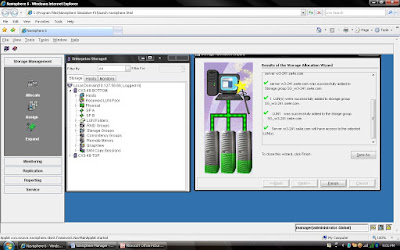

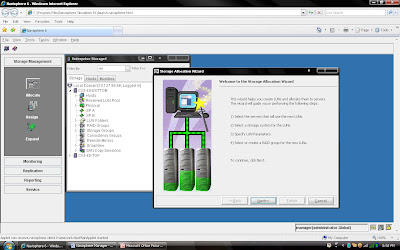

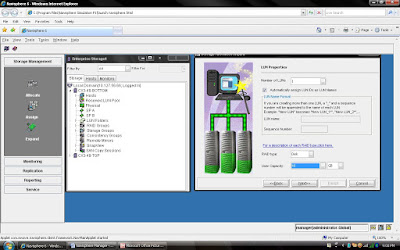

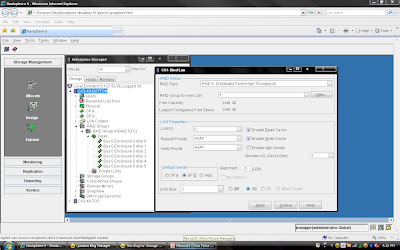

Once you have created the RAID Group, you can bind the

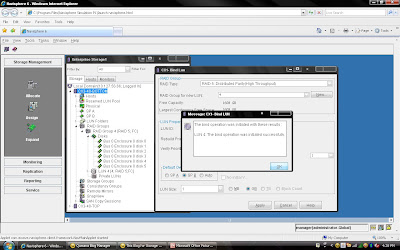

Once you have created all the
